What “the edge” means for telcos

Dimitris Mavrakis, Senior Research Director, manages ABI Research’s telco network coverage, including telco cloud platforms, digital transformation, and mobile network infrastructure. Research topics include AI and machine learning technologies, telco software and applications, network operating systems, SDN, NFV, LTE diversity, and 5G.

Despite the network edge being a hot topic for the past ten years, it is still difficult to pin down to a specific location, technology, or vendor ecosystem.

The edge means different properties, benefits, and requirements for different companies in the value chain. For a chipset manufacturer, the edge is the device itself. For a hyperscaler, however, the edge is a data center deployed in a metropolitan area.

Between these two extremes lies the domain of telecom operators, which have been deploying distributed, connected computing platforms to power their 4G, 5G, DSL, and FTTx networks for more than a decade.

The edge continuum

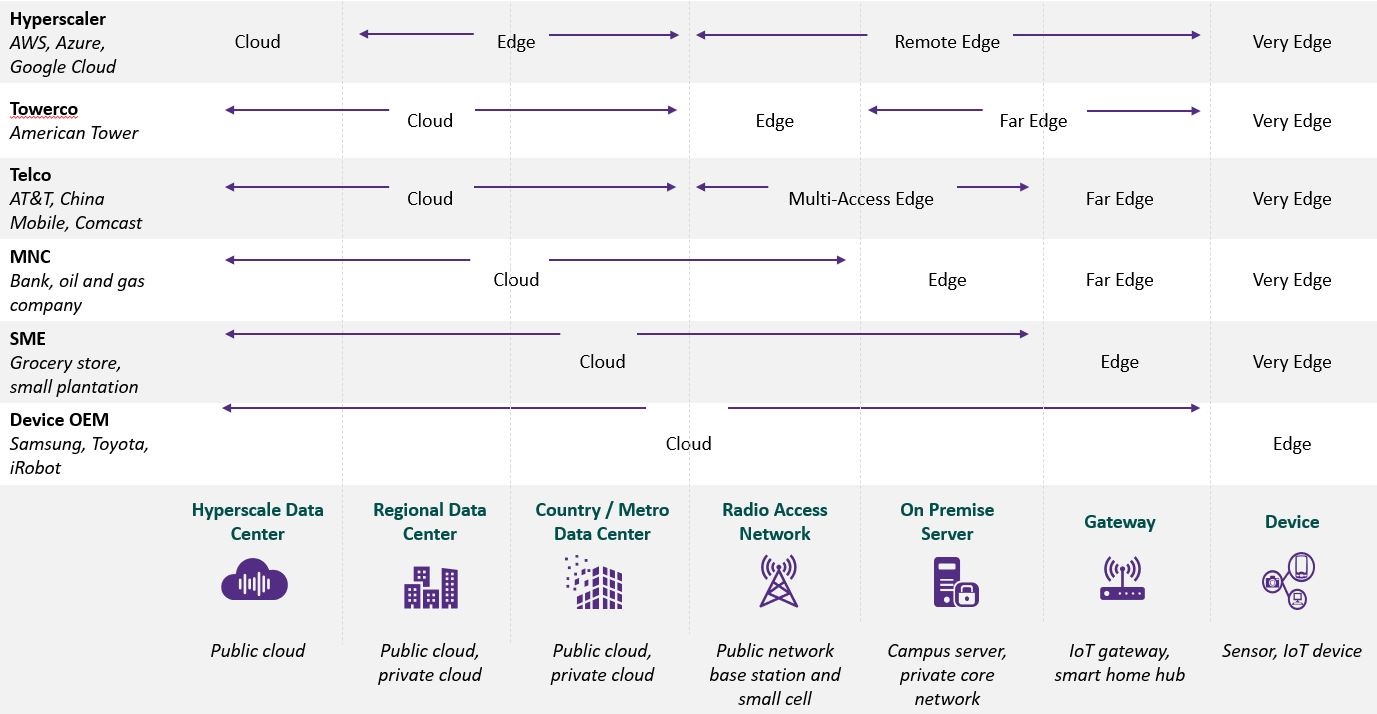

ABI Research has coined the term “edge continuum” to cover how the edge means different things to different organizations. The following diagram summarizes varying edge locations, and how each company addresses them.

As illustrated above, a strict definition of the edge is not possible, especially when enterprises, which are the most important end users for edge services, have different demands.

For example, a large manufacturing company will require on-premises edge services to ensure that Operational Technology (OT) data remains within its physical boundaries. On the other hand, a retail Small-to-Medium Enterprise (SME) will not be able to afford an on-premises edge deployment and will likely consume edge services rather than deploy its own infrastructure. This fragmented approach is driven by different players, each with its own sphere of influence.

It's no secret that hyperscalers are currently driving the evolution of edge services and infrastructure. For example, Amazon Web Services (AWS) is deploying edge infrastructure in carrier networks (AWS Wavelength) and even in enterprise premises (AWS Outposts). Google and Microsoft have similar strategies and products.

However, these companies cannot distribute their processing capabilities without making significant real estate investments, deploying infrastructure, and managing these distributed edge servers. For this reason, hyperscalers are partnering with telecom operators to place their servers in existing passive infrastructure.

Hyperscalers have already attracted developer interest with their infrastructure, platforms, and software expertise and are now extending this to the edge. Telecom operators, on the other hand, already own edge locations but have not yet attracted developer or enterprise interest. Combining hyperscaler and telecom operator strengths will likely create a thriving edge ecosystem that centralizes processing capabilities while also offering low-latency edge services to address enterprise requirements.

Centralized vs distributed processing

Cloud computing democratized processing and storage capabilities. It also centralized application payloads, since the traditional cloud computing model depends on a few large data centers. This abundance of processing power has spurred application innovation. In recent years, however, Artificial Intelligence (AI) and Machine Learning (ML) have created the need for a more distributed architectural approach to processing. In this domain, different hardware, including CPUs, GPUs, FPGAs, DSPs, ASICs, and other accelerators, each with its own instruction set, will be used to execute tasks that can be AI-based, connectivity-based, or application processing-based.

These processing payloads will likely be distributed and placed near the enterprises that require them. Moreover, the traditional training model for AI/ML algorithms requires a large amount of data to be collected in one place. This will likely become unsustainable as AI/ML applications proliferate and become much larger and more complex, which is another big driver for distributed processing in the form of edge computing.

A combination of centralized and distributed processing will likely come to dominate, and telecom operators will play a key role in distributing these payloads to the edge of their networks. It is imperative that they prepare their infrastructure for the edge cloud and the distribution of these processing payloads. We’ve already seen how edge computing in telecom networks can enable new types of use cases.

The present and future of the telco edge

The pandemic has shown that telecom networks are vital in both consumer and enterprise domains. They were key pillars that kept the global economy going while quarantine measures were enforced across the globe. In fact, the pandemic has accelerated many facets of telecom network evolution, and edge computing is one of them. For example, China Mobile applied edge computing when its 5G network was used to measure the temperature of citizens through connected cameras and locate individuals running fevers.

However, the extreme distribution of processing capabilities comes with its own challenges. Telecom operators will soon need to manage hundreds, or even thousands, of nodes that make up a heterogeneous processing environment. These networks will require centralized orchestration and automation, since manual control and maintenance will not be possible at that scale.

To address these needs and challenges, telecom operators must plan for the edge cloud on two fronts: upgrading their infrastructure to support more applications and payloads, and modernizing their orchestration and network automation tools to manage this new network domain. By doing so, they will become central to the new edge-computing ecosystem and valuable partners to hyperscalers and enterprises.