Latest Articles

Article

What service providers really want from autonomou ...

Ciena and Blue Planet leaders unpack the operational and financial outcomes driving the shift to AI-driven autonomy.

Article

What is scale across? The optical innovations ena ...

As AI training scales beyond the limits of a single data center, a new architectural model is emerging: scale across. In this blo ...

Article

1.6T quantum-safe encryption: Protecting critical ...

High-speed meets high-security. Ciena’s Paulina Gomez breaks down how WaveLogic 6 Extreme delivers the industry’s only 1.6Tb/s qu ...

Article

Solution validation is more than testing. It is a ...

In an era of AI-scale traffic and constant network change, hoping a solution will work is no longer enough. Ann Cantrell explains ...

Article

Network management and control without the IT inf ...

As networks scale faster and AI-driven demands intensify, legacy on-premises management infrastructure is becoming a hidden const ...

Article

Navigating NIS2: Ciena's commitment to cybersecur ...

The EU’s NIS2 Directive represents a significant step forward in strengthening cybersecurity across critical infrastructure and e ...

Article

Dark, lit, or hybrid—if your network can’t move d ...

More leaders are asking: Should we build or buy our network core? Kevin Sheehan, Ciena’s Chief Technology Officer of the Americas ...

Article

What does DCI really mean today? From scale acros ...

In this blog, Ciena’s Helen Xenos explores the requirements behind each DCI deployment and how the right technology helps make th ...

Article

Empowering youth through digital inclusion in Mon ...

Discover how Ciena and West Island Community Shares are expanding tech access and digital confidence for underserved youth across ...

Article

Cut deployment time from weeks to hours with Navi ...

Historically, optical network deployments have been manual, error-prone, and time-consuming, potentially leaving some capacity un ...

Article

AI inference is the next network stress test

When people think about AI infrastructure, they usually focus on large language model (LLM) training. But training is only the op ...

Article

A look back: Ciena’s top milestones of 2025

From 1.6T becoming real to AI reshaping how networks are built and run, here’s a look at the milestones that defined Ciena’s 2025.

Article

A well-defended and resilient security operation: ...

When it comes to cybersecurity, the finish line keeps moving. Threats get faster, rules become more complex, and the expectations ...

Article

Scaling transport networks - The transition to Se ...

While legacy transport technologies such as MPLS-TP, RSVP-TE, and LDP have served well in the past, it is Segment Routing that is ...

Article

Beyond bandwidth: How Ciena Services keeps neosca ...

Neoscalers excel at delivering massive GPU clusters and LLM platforms to tackle the toughest AI compute challenges. But what abou ...

Article

How Ciena builds trust through a “confidently com ...

In today’s fast-paced digital world, trust isn’t just important; it’s essential. In this blog, Ciena’s Global Head of Security Go ...

Article

Set yourself up for AIOps success with clean netw ...

AI promises to significantly enhance network operations. But is your network data clean enough to provide a solid foundation for ...

Article

Key innovation in Passive Optical Network (PON) t ...

PON has seen a significant evolution over recent years. Ciena’s Wayne Hickey reflects on an exciting new area and data center out ...

Article

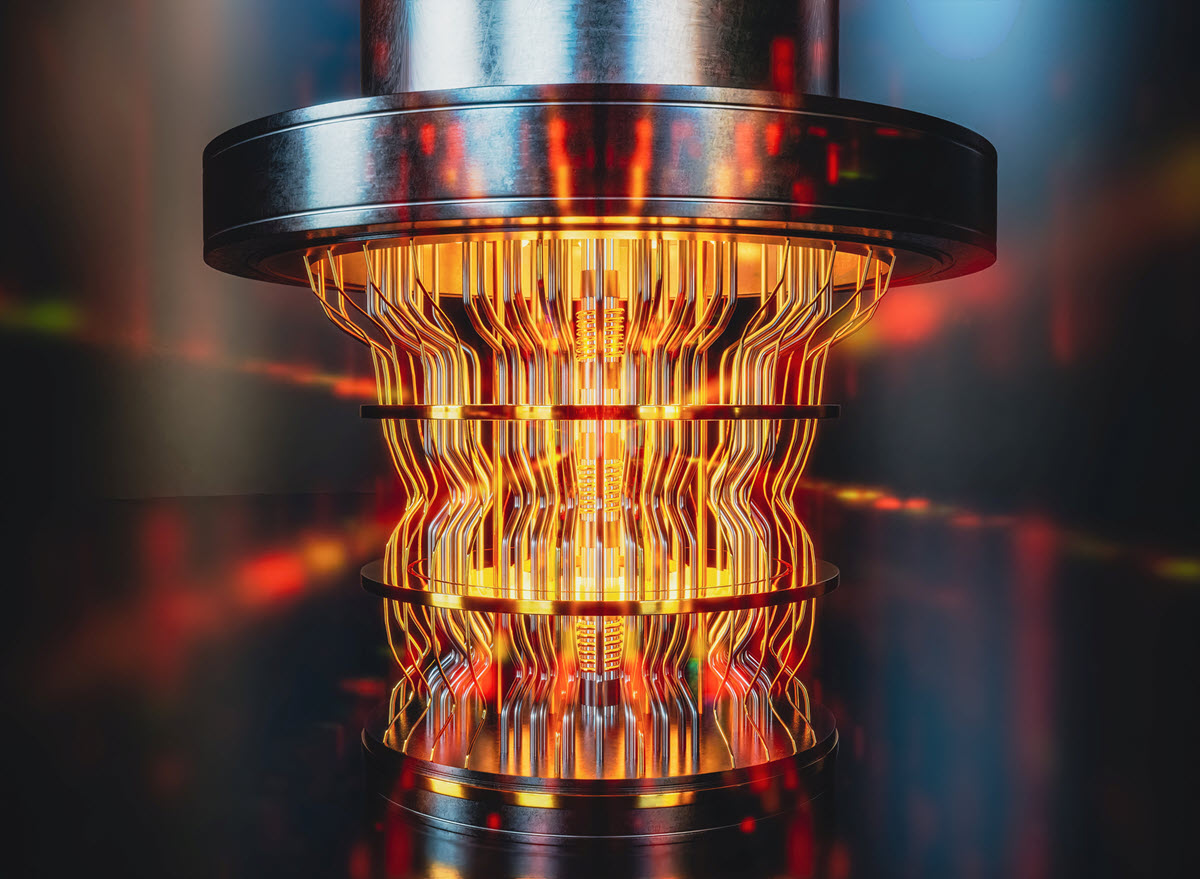

Shaping the future of long-distance quantum-secur ...

Paulina Gomez speaks with Farzam Toudeh-Fallah, who leads Ciena’s Quantum Communications R&D team, about the need for quantum-sec ...

Article

Optical networks: Powering AI innovation for neos ...

Neoscalers are rising as a new class of network operators—at the forefront of the AI revolution with unique challenges and opport ...

Article

Introducing Waveserver E-Series: Purpose-built fo ...

We’re expanding the Waveserver family with the brand-new Waveserver E-Series—compact, energy-efficient platforms designed to exte ...

Article

AI is rewriting the rules of networking: What it ...

AI is redefining the way operators of all kinds design, scale, and operate their networks. Ciena’s Brodie Gage explores how optic ...

Article

Enterprise AI is booming. What does it mean to se ...

As enterprises race to turn AI into growth and a competitive advantage, Ciena’s Francisco Sant’Anna explains how service provider ...

Article

Securing critical data in the quantum era: Why ac ...

Quantum computing is closer than we think, promising breakthroughs in healthcare, finance, and beyond. However, it also threatens ...