It's time for a new interconnection model for the growing amount of data in motion

Over the next five years, we're going to see a massive surge in digital data. The amount of new data created will be more than the amount created over the past ten years combined.

In fact, IDC estimates that approximately 291 zettabytes (ZB) of digital data will be generated in 2027, which is 291,000,000,000,000,000,000,000 bytes (Source: IDC Global DataSphere Forecast, 2023-2027, #US50554523, April 2023).

That much data is equivalent to streaming the entire Netflix catalog 663 million times.

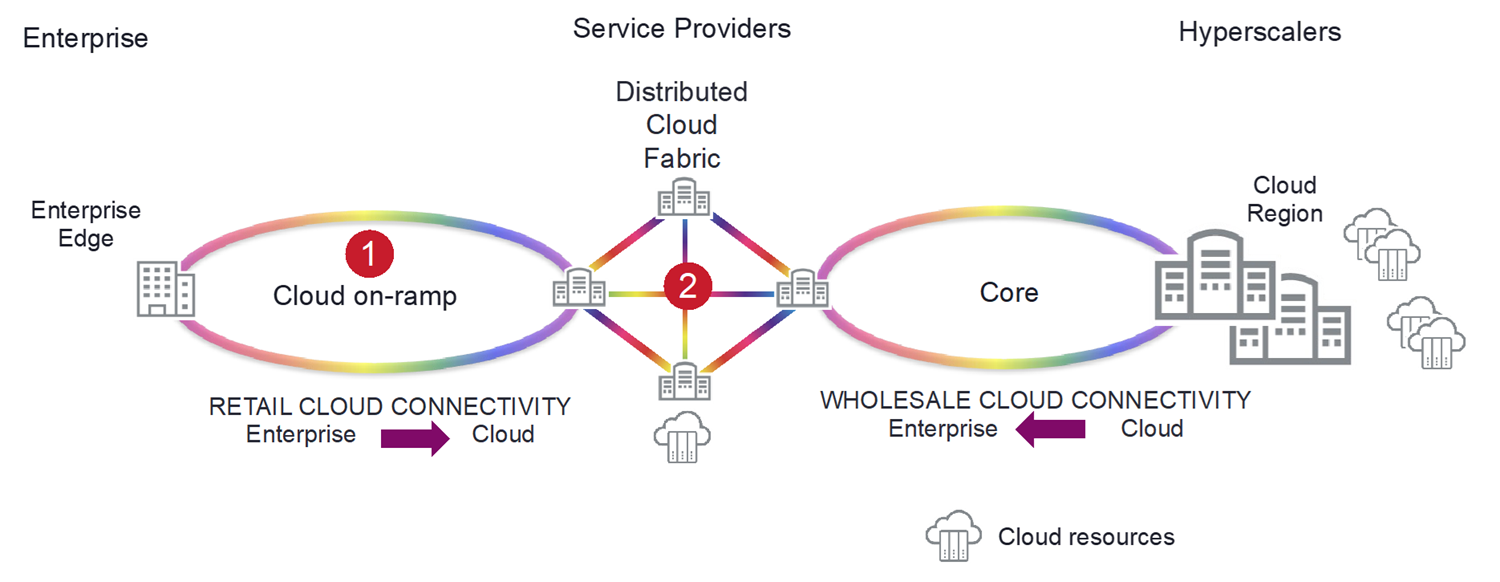

In the first blog in our series, we covered many of the drivers of this demand for data, as well as a cloud architecture where service providers sit at the intersection between enterprises and hyperscalers.

How Cloud is Changing Enterprise Connectivity Requirements

It is estimated that only a small portion (approximately 10%) of data is newly captured or created. IDC estimates that less than 20% of data generated globally in 2022 was generated in the cloud.

Cloud-based services have seen huge increases in demand because they make it simple and fast for enterprises to process data and get insights from it. However, getting data in and out of the cloud becomes more complex as the amount of data grows. That friction is one reason only a small share of data is in the cloud today.

Digital transformation with cloud-first applications is a complex transition, and moving to a new operating model raises many issues that need to be considered. Because of this complexity, it is difficult to predict how much connectivity an enterprise will require to support its cloud transformation, and connectivity is usually an afterthought.

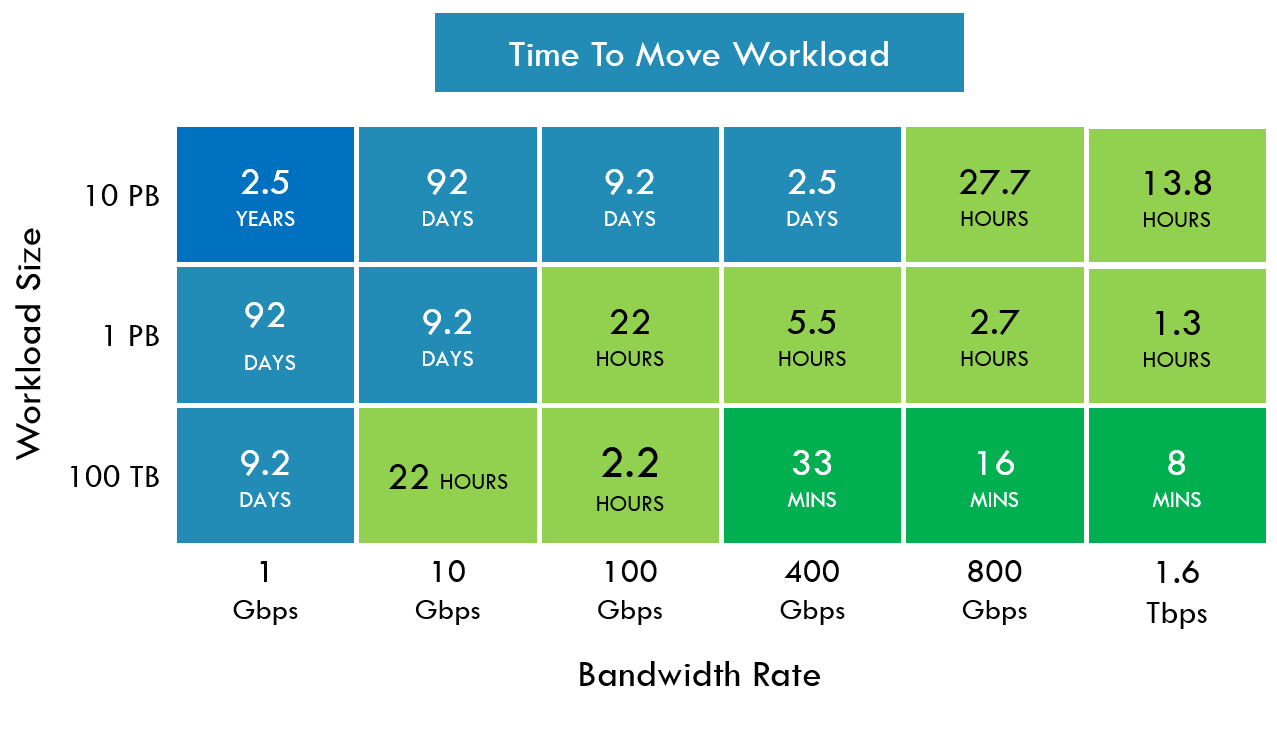

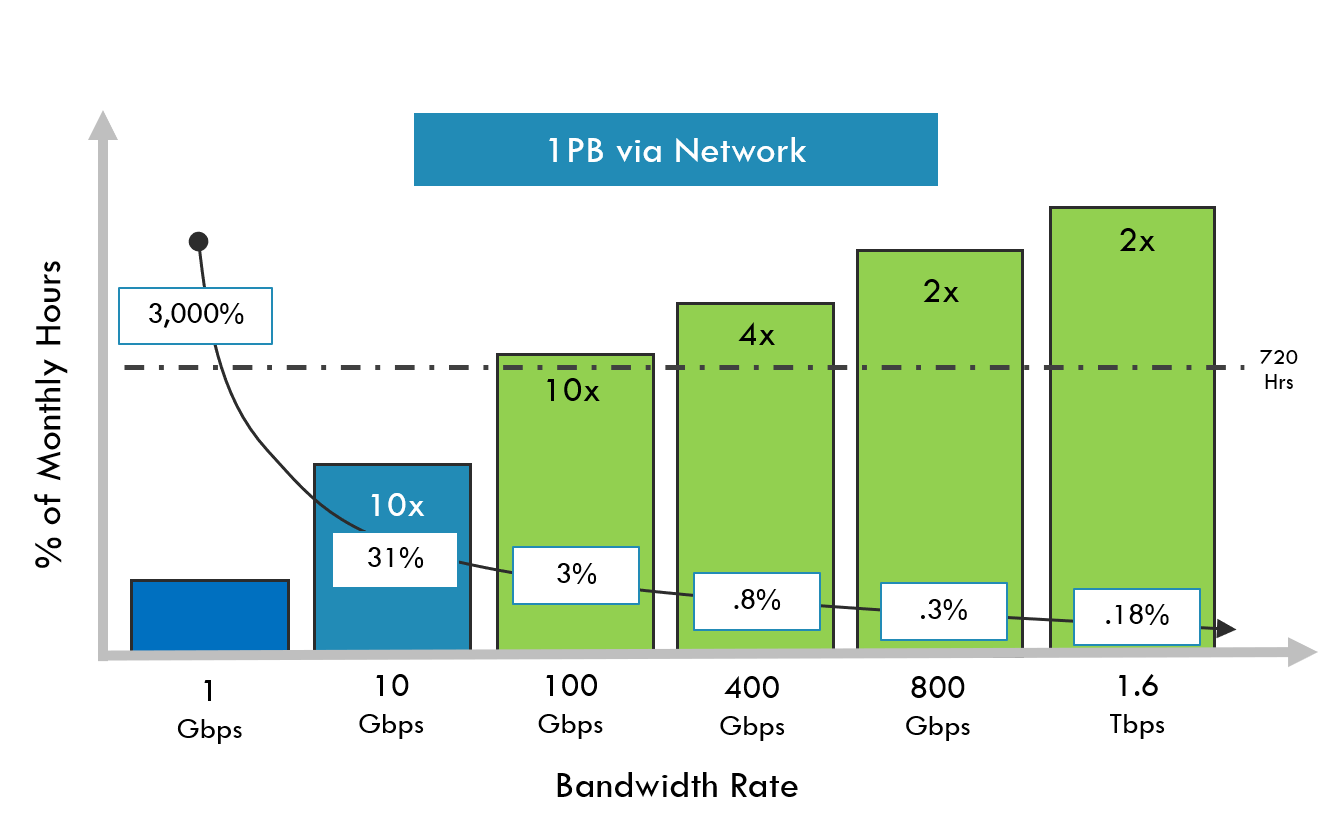

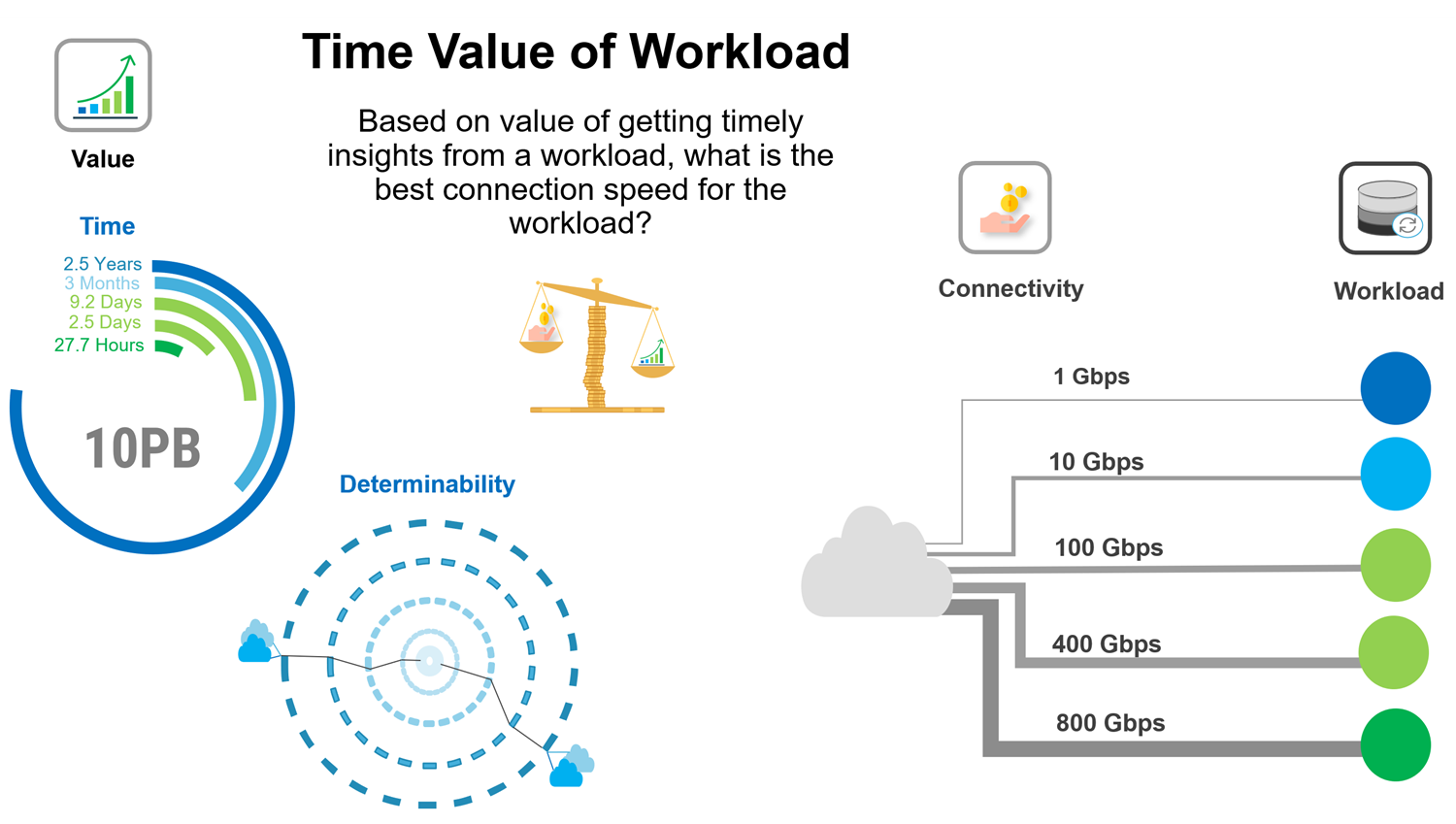

To illustrate, the following table provides an example of the time to move a workload over a network based on the connection bandwidth, and shows the percentage of monthly hours that would be required for a 1 petabyte (PB) workload at different bandwidth rates. As the bandwidth rate increases, the workload moves much faster, so the connection is busy for a smaller share of the month and its peak-to-average ratio rises. The time saved comes at a higher cost, because the larger bandwidth must be available end-to-end to realize the benefit.
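The arithmetic behind a table like this can be sketched in a few lines of Python. This is a minimal illustration, not the source of the figures in the table: the bandwidth rates listed, the 730-hour average month, and the function names are all assumptions, and the calculation ignores protocol overhead and assumes the link runs at its full rate for the whole transfer.

```python
SECONDS_PER_HOUR = 3600
HOURS_PER_MONTH = 730  # assumption: average month (8,760 hours / 12)
PETABYTE = 10**15      # decimal petabyte, in bytes


def transfer_hours(workload_bytes: float, rate_bps: float) -> float:
    """Hours needed to move a workload at a sustained rate in bits per second."""
    return (workload_bytes * 8) / rate_bps / SECONDS_PER_HOUR


def share_of_month(hours: float) -> float:
    """Transfer time as a percentage of the hours in an average month."""
    return 100.0 * hours / HOURS_PER_MONTH


# Illustrative bandwidth rates (assumed, not taken from the original table).
rates_bps = {"1 Gb/s": 1e9, "10 Gb/s": 1e10, "100 Gb/s": 1e11, "400 Gb/s": 4e11}

for label, rate in rates_bps.items():
    hours = transfer_hours(PETABYTE, rate)
    print(f"{label:>9}: {hours:8.1f} hours ({share_of_month(hours):6.1f}% of a month)")
```

A result above 100% means the workload cannot be moved within a single month at that rate, which is the case for 1 PB over a 1 Gb/s connection.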

As workload requirements become more demanding, connectivity requirements follow, and a higher-performance connection is needed. High-performance cloud connections typically require working with network providers, colocation providers, and cloud providers to establish an end-to-end connection. An enterprise will have to make long-term commitments at a higher monthly cost to establish end-to-end connectivity, which is challenging when it cannot predict how much connectivity it will need to meet business requirements over time.

More options for acquiring secure high-speed cloud onramps would make it easier for enterprises to begin their cloud transformation and would shorten the journey. Service providers are well positioned to meet this need but should consider some changes to their edge architecture and commercial models to best meet the business challenges that come with demanding workloads in a hybrid multi-cloud architecture.

How optical innovation is changing secure cloud onramps

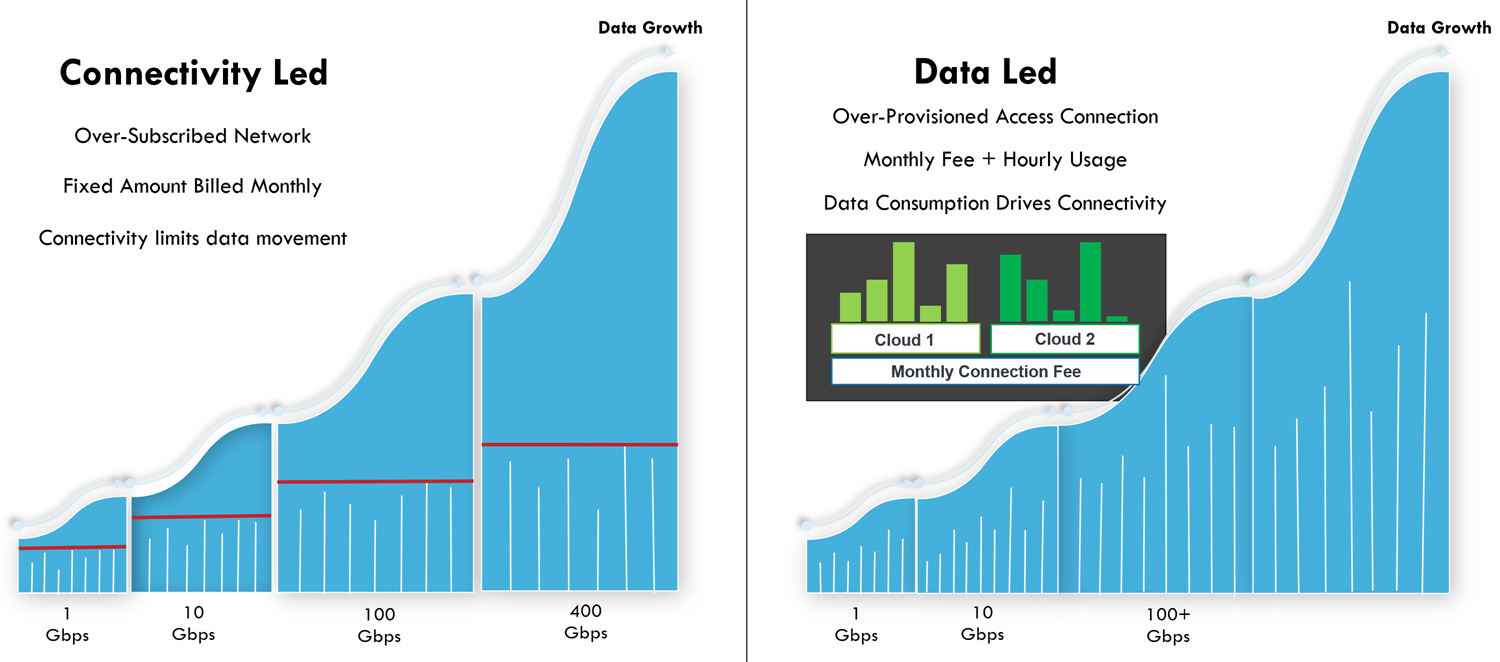

Today, connectivity and cloud onramps are typically offered in fixed bandwidths, and workloads are bound by the provisioned bandwidth. This gives the service provider predictability when capacity planning to maximize its infrastructure investment while still meeting the customer Service Level Agreement (SLA), but it limits the service provider's ability to capitalize on available idle capacity.

Optical networking has evolved to the point where exceptionally large amounts of data can be moved in a space, power, and cost-optimized system. Coherent optical transmission enables the transport of terabits of data per second (Tb/s) to maximize the value of a single fiber pair. As the packet and optical planes become more tightly integrated, there is an opportunity to move workloads with the greatest efficiency. Not all workloads have the same value, but dedicated cloud connections ingress and egress at specific locations, which provides determinability when provisioning connections between the customer edge and the cloud edge.

By over-provisioning high-speed, low-cost dedicated access connections to the cloud interconnection location, you can begin to imagine a new model where secure high-speed optical connections coupled with elastic logical cloud connections respond quickly to changing workload requirements. A service provider would be able to offer the enterprise a new option for cloud connectivity based on the Time to Value of a workload. In other words, the time/bandwidth paradigm of getting insights from demanding workloads shifts: by scheduling larger amounts of idle capacity, workloads move into the cloud faster, cutting time to insights from months to days, days to hours, and hours to minutes.
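To make the effect of scheduling idle capacity concrete, here is a minimal sketch under simplifying assumptions. The function `hours_to_complete`, the hourly idle-capacity forecast, and the workload and rate values are all hypothetical; they do not describe any particular product or scheduler. Each hour, the workload moves at the committed baseline rate plus whatever idle capacity is granted for that hour.

```python
from typing import Optional, Sequence

SECONDS_PER_HOUR = 3600


def hours_to_complete(
    workload_bits: float,
    baseline_bps: float,
    idle_bps_by_hour: Sequence[float],
) -> Optional[float]:
    """Hours to finish a transfer given a baseline rate and hourly idle capacity.

    Returns None if the forecast ends before the workload has been moved.
    """
    remaining = float(workload_bits)
    if remaining <= 0:
        return 0.0
    for hour, idle_bps in enumerate(idle_bps_by_hour):
        # Bits that can be moved in this hour at baseline plus granted idle rate.
        movable = (baseline_bps + idle_bps) * SECONDS_PER_HOUR
        if movable >= remaining:
            return hour + remaining / movable
        remaining -= movable
    return None


# Hypothetical example: a 100 TB workload (8e14 bits) on a 10 Gb/s commitment.
workload = 100e12 * 8
fixed_only = hours_to_complete(workload, 10e9, [0.0] * 48)
with_idle = hours_to_complete(workload, 10e9, [90e9] * 48)
print(f"fixed bandwidth only: {fixed_only:.1f} hours")
print(f"with 90 Gb/s of idle capacity scheduled: {with_idle:.1f} hours")
```

In this toy model the same access connection carries both cases; only the amount of capacity granted per hour changes, which is the shift from a fixed-bandwidth offer to one priced around how quickly the workload needs to arrive.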

Is the time right for a new interconnection option?

With rapidly growing amounts of data, a growing demand for cloud services, and innovations in optical networking, the market demand and technology are here.

With improved cost-per-bit economics and determinability about where workloads need to ingress and egress the network, the last missing piece of the puzzle is to bring the service provider and cloud provider connection consumption models into alignment.

Currently, most service providers offer a “connectivity led” consumption model that is based on an over-subscribed network architecture, which restricts data movement to the amount provisioned. It provides predictability for both the enterprise and the service provider, but it limits monetization of the service.

Ideally, what we need for cloud onramps is a “data led” consumption model that allows a customer to request more capacity from the network when it needs to move workloads faster. Moving workloads faster would also free up more idle bandwidth on the cloud interconnection ports to sell to other customers who want the same ability to move workloads into the cloud faster.

Over-provisioning the last mile with coherent networking into a distributed cloud fabric removes the bandwidth constraint of the last mile and reduces the complexity of connection scheduling, while allowing the same optical connection to be used for multiple cloud interconnection sites, which are usually located within the same colocation facilities.

It is my belief that technology and economics have evolved to the point where developing an edge cloud interconnection architecture to support a “data led” consumption model is a viable option. This would enable new use cases for those who have made cloud an integral part of their digital transformation strategy and require a frictionless option for secure, elastic, high-speed, hybrid multi-cloud connections.

With global data growing rapidly and cloud consumption expanding alongside it, it is vital that enterprises have options that meet their changing business requirements rather than constrain them. Together with our strategic partners, Ciena is helping customers evolve their networks to capitalize on the growing influence data will have on the networks of the future.