How much bandwidth does Broadcast HD video use?

Broadcast HD video travels over networks in a number of scenarios: from a broadcast venue to a studio, between studios or live broadcast centers, or from a studio or live broadcast center to a video head-end or satellite distribution point. Additionally, in film and video post-production, native video content can be distributed over the network among post-production teams.

So how much bandwidth does an HD video stream actually use? The answer is simple: It depends…

As you'll see in the calculations, many variables affect how much bandwidth a broadcast HD video stream actually uses.

Uncompressed HD: The Future of Broadcast Video

Most people are familiar with video formats such as MPEG-2 and H.264. These are standards defined by the Moving Picture Experts Group (MPEG) for audio and video compression. The goal of these compression tools is to reduce the bandwidth requirements of a video file or stream as much as possible while degrading the quality of the video as little as possible, and they accomplish this goal to varying degrees.

In the not-too-distant past, these types of compression tools were used by broadcasters to transmit source video to distribution facilities. In a non-HD world, it didn’t really matter if a little video quality was lost.

Increasingly, however, broadcasters prefer to transmit their HD video content, especially live content, in an uncompressed state (see Broadcast video having its own network revolution). For example, broadcasters like ESPN or Discovery transmit uncompressed HD video to pay-TV service providers to ensure they deliver the best video quality possible. How each pay-TV provider then compresses that video before sending it on to its customers is up to the provider.

Simple Math, Big Pipes

The math required to calculate the digital bandwidth requirements for uncompressed video is relatively simple, and all you need are three parameters:

- Parameter 1: Color depth. Also referred to as bits per pixel (bpp), this defines how many colors each pixel in the video can represent. For example, a color depth of 1 bit is monochrome (either black or white), while 8 bits can represent 256 colors. Most professional broadcast cameras have a color depth of 24 bits or more per pixel, which is considered “Truecolor” with over 16 million color variations. However, some professional cameras use a technique called "chroma subsampling" to reduce the number of bits needed (and thus the bandwidth required) to achieve a full spectrum of color. Because the human eye is less sensitive to fine color detail than to brightness detail, chroma subsampling stores color information at a lower resolution than brightness. For example, 4:2:2 chroma subsampling reduces 24 bpp to 16 bpp with little visible effect on video quality. While this is technically a form of compression, even some top-end professional cameras use chroma subsampling with raw video.

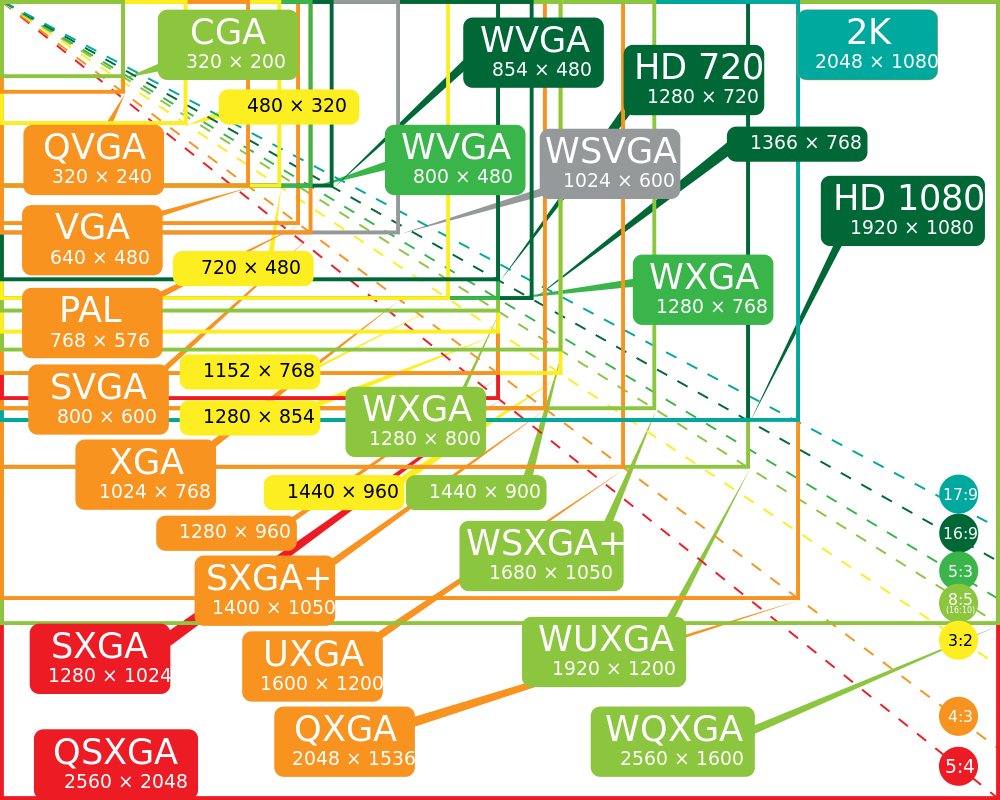

- Parameter 2: Video resolution. This is the width and height of the video frame, measured in pixels. HD video is generally defined as having a resolution of at least 1280x720, and 1920x1080 is another common HD resolution, but as the chart below shows, there is a wide variety of video resolution formats. With broadcast video, however, each frame also includes horizontal and vertical blanking intervals around the active picture, a holdover from the timing of CRT displays. Serial digital interfaces use this space to carry ancillary data such as embedded audio, so it still counts toward the bandwidth. (This is different from "overscan," which is how TV sets crop the edges of the displayed picture.) So for 59.94/60 Hz formats, a 1920x1080 picture is carried in a 2200x1125 raster, and a 1280x720 picture in a 1650x750 raster, for uncompressed HD broadcast video.

- Parameter 3: Frame rate. This is the number of “still images,” or frames, sent per second (fps) as part of the video stream. Broadcast HD is transmitted at 59.94 fps in North America and 50 fps in Europe. However, you also need to account for possible "interlacing" of the video, which sends only half of each frame at a time as alternating fields containing either the odd rows or the even rows of the image. Compared with progressive video at the same rate, this halves the number of "full" frames sent per second, and likewise cuts the bandwidth requirement in half.

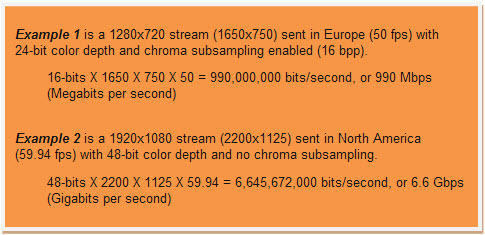
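The three parameters combine as a straight product. The sketch below is a minimal Python illustration, not the author's own worksheet: the helper names `bits_per_pixel` and `uncompressed_bps` are hypothetical, it assumes Y'CbCr sampling and the total raster sizes from Parameter 2 (2200x1125 and 1650x750), and it models interlacing simply as halving the bandwidth at the same quoted rate.

```python
"""Sketch: uncompressed bandwidth = pixels per frame x bits per pixel x frame rate."""

# Assumption: Y'CbCr sampling. Average chroma samples per pixel for each
# color-difference component (Cb or Cr) in common sampling schemes.
CHROMA_FRACTION = {"4:4:4": 1.0, "4:2:2": 0.5, "4:2:0": 0.25}


def bits_per_pixel(bits_per_sample: int, scheme: str = "4:4:4") -> float:
    """Average bits per pixel: one luma sample plus two (possibly subsampled) chroma samples."""
    return bits_per_sample * (1 + 2 * CHROMA_FRACTION[scheme])


def uncompressed_bps(total_width: int, total_height: int, bpp: float,
                     rate: float, interlaced: bool = False) -> float:
    """Bits per second for an uncompressed stream.

    total_width/total_height: full raster size, e.g. 2200x1125 for 1080-line video.
    rate: the quoted frame (or field) rate, e.g. 59.94 or 50.
    interlaced: if True, each field carries only half the lines, halving the result.
    """
    bps = total_width * total_height * bpp * rate
    return bps / 2 if interlaced else bps


if __name__ == "__main__":
    truecolor = bits_per_pixel(8, "4:4:4")   # 24 bpp
    subsampled = bits_per_pixel(8, "4:2:2")  # 16 bpp
    cases = {
        "1080p59.94, 24 bpp": uncompressed_bps(2200, 1125, truecolor, 59.94),
        "1080i59.94, 24 bpp": uncompressed_bps(2200, 1125, truecolor, 59.94, interlaced=True),
        "720p59.94, 16 bpp": uncompressed_bps(1650, 750, subsampled, 59.94),
    }
    for name, bps in cases.items():
        print(f"{name}: {bps / 1e9:.2f} Gbps")
```

Under these assumptions the script prints 3.56, 1.78, and 1.19 Gbps for the three cases; these are illustrative inputs, not the article's own examples.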

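Once you know these three parameters, calculating the total bandwidth of uncompressed HD video is straightforward: multiply the pixels per frame (width x height) by the bits per pixel and by the frame rate, then halve the result if the video is interlaced. Here are a couple of examples of how the calculation works: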

In the two calculations above, Example 1 represents the low-end bandwidth requirement of an uncompressed HD video stream, and Example 2 represents the high end, a significant 7X the bandwidth of Example 1.

3DTV adds one more possible parameter. It is achieved by sending two video streams, one for each eye, which doubles the above calculations. That means a single uncompressed HD-quality 3D video stream could require as much as 13 Gbps of bandwidth.

As you can see, these are huge amounts of bandwidth. That's why even uncompressed broadcast HD video is often interlaced (1080i, for example, cuts the total bandwidth requirement in half) or uses chroma subsampling (which cuts it by one third).
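Because the bandwidth is a straight product of the parameters, each of these options scales it by a fixed factor, and the factors multiply. The short sketch below assumes a progressive 8-bit 4:4:4 (24 bpp) stream as the baseline and 16 bpp for chroma subsampling, matching the color-depth discussion; `relative_bandwidth` is a hypothetical helper. It shows, for instance, that interlacing plus chroma subsampling leaves one third of the original bandwidth, and that 3DTV's second view doubles whatever the 2D figure is.

```python
# Sketch: how interlacing, chroma subsampling, and 3D scale uncompressed bandwidth.
# Assumption: baseline is progressive 8-bit 4:4:4 (24 bpp); 4:2:2 subsampling gives 16 bpp.
BASELINE_BPP = 24


def relative_bandwidth(bpp: int = BASELINE_BPP, interlaced: bool = False, views: int = 1) -> float:
    """Bandwidth as a fraction of the progressive, 24 bpp, single-view baseline."""
    factor = bpp / BASELINE_BPP
    if interlaced:
        factor /= 2        # each field carries only half the lines
    return factor * views  # 3DTV sends one stream per eye (views=2)


options = {
    "progressive, 24 bpp": relative_bandwidth(),
    "interlaced, 24 bpp": relative_bandwidth(interlaced=True),
    "progressive, 16 bpp (4:2:2)": relative_bandwidth(bpp=16),
    "interlaced, 16 bpp (4:2:2)": relative_bandwidth(bpp=16, interlaced=True),
    "3D progressive, 24 bpp": relative_bandwidth(views=2),
}

for name, factor in options.items():
    print(f"{name:<30} {factor:.2f}x")
```

The printed factors are 1.00x, 0.50x, 0.67x, 0.33x, and 2.00x; multiplying any of them by a baseline figure gives the corresponding bandwidth.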

With so many variables involved, the industry has moved to develop standards that simplify broadcast HD video transport. The Society of Motion Picture and Television Engineers (SMPTE) has developed several of them. HD-SDI is a serial digital interface standard for transmitting HD-quality video over a nominal 1.5 Gbps (1.485 Gbps) link (e.g. using 1080i and chroma subsampling), and 3G-SDI is a standard for a nominal 3 Gbps link for HD video applications requiring greater resolution, frame rate, or color fidelity than the HD-SDI interface can provide.
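As a rough cross-check, the nominal HD-SDI and 3G-SDI rates fall straight out of the same formula. The sketch below assumes SDI's usual 10-bit 4:2:2 sampling (10 luma bits plus 10 bits of alternating Cb/Cr per pixel, or 20 bpp), the total raster sizes from Parameter 2, and 60 Hz timing (59.94 Hz variants run 1/1.001 slower). `raster_bps` and `smallest_link` are hypothetical helpers, and real equipment involves more detail than this simplified model.

```python
# Sketch: which nominal SDI link rate an uncompressed HD format fits into.
# Assumptions: 10-bit 4:2:2 sampling (20 bits per pixel) unless stated, full raster
# sizes including blanking, and 60 Hz timing (59.94 Hz variants are 1/1.001 slower).
HD_SDI_BPS = 1.485e9      # nominal HD-SDI rate (SMPTE 292M)
THREE_G_SDI_BPS = 2.97e9  # nominal 3G-SDI rate (SMPTE 424M)


def raster_bps(total_width: int, total_height: int, rate: float,
               interlaced: bool = False, bpp: int = 20) -> float:
    """Bits per second for the full raster; interlaced video sends half the lines per field."""
    frames_per_second = rate / 2 if interlaced else rate
    return total_width * total_height * bpp * frames_per_second


def smallest_link(bps: float) -> str:
    """Return the first nominal SDI link whose rate can carry the stream."""
    for name, capacity in (("HD-SDI", HD_SDI_BPS), ("3G-SDI", THREE_G_SDI_BPS)):
        if bps <= capacity:
            return name
    return "more than 3G-SDI"


formats = {
    "1080i60, 4:2:2": raster_bps(2200, 1125, 60, interlaced=True),
    "720p60, 4:2:2": raster_bps(1650, 750, 60),
    "1080p60, 4:2:2": raster_bps(2200, 1125, 60),
    "1080p60, 4:4:4": raster_bps(2200, 1125, 60, bpp=30),
}

for name, bps in formats.items():
    print(f"{name}: {bps / 1e9:.3f} Gbps -> {smallest_link(bps)}")
```

In this model 1080i and 720p land exactly on 1.485 Gbps (HD-SDI), 1080p60 at 4:2:2 lands exactly on 2.97 Gbps (3G-SDI), and 10-bit 4:4:4 1080p60 at 4.455 Gbps no longer fits a single 3G-SDI link.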

The Move to Fiber-Optic Transport

Combine these high bandwidth requirements with the obvious need for a very reliable network that can transport the video stream across sometimes very long distances, and you quickly start thinking about the need for optical transport.

Indeed, there are already service offerings from carrier network operators that target this very market. For example, Level 3’s Vyvx video service uses the carrier’s extensive fiber infrastructure to support video transport in a variety of formats, including uncompressed HD at up to 3.0 Gbps.

Ciena has also tailored a solution for the video transport market. Ciena’s 565, 5100 and 5200 platforms are capable of transporting uncompressed standard-definition and HD video across distances from metro to ultra long-haul. Network operators like Lightower Fiber Networks are already using our video transport solution to provide video delivery services to a major U.S. broadcaster on their network (see Ciena Selected by Lightower Fiber Networks to Deliver Digital Video Transport for Major Broadcast Company).

Keep in mind that the native-quality HD video that is created by broadcasters usually isn’t delivered to your TV in uncompressed format. Most consumer TV content is significantly compressed to reduce the total bandwidth required as it travels to the end user. The same holds true for over-the-top (OTT) video services like Netflix, and also for mobile broadband video. But as bandwidth becomes more plentiful and more affordable, the applications for uncompressed HD video continue to expand.

In fact, completely different industries increasingly require uncompressed HD video to accommodate new image-intensive applications. This includes the healthcare industry, where image quality is critical for new applications such as remote diagnosis and even remote surgery.

----------------------------------------------------------------

[Editor's Note: Oct 18, 2011 - This blog entry has been updated since its original posting. When I lightheartedly wrote that the answer to the bandwidth requirements for HD video is "it depends," I had no idea how right I was. Many thanks for the extensive feedback I've received in the HDTV and InternetTV discussion forums on LinkedIn; the members of those groups helped me create a more accurate representation of the bandwidth calculations for HD video.]