How to Ensure Your Network Analytics Solution Can Drive Intelligent Automation

Today, many vendors are offering big data analytics solutions for service provider networks. If you’ve done some research on analytics, you know the basic processes involved – data collection and processing, identifying data patterns, making sense out of these patterns, and then using these insights to take actions that address a business need.

Today’s services ecosystem is increasingly complex, and network operators are under significant pressure to keep up with their customers’ fast-changing service demands. As a result, intelligent automation is appealing to a growing number of operators.

But if the goal is to use analytics to enable network automation, what important features and attributes should you consider before selecting an intelligent automation solution? In order to answer this question, let’s walk through the various steps involved in the analytics process.

1. Data collection and processing

Have you heard of the ‘three V’s’ of big data analytics? These are three factors that shape data collection and processing: volume, velocity, and variety. Volume is the V most people associate with big data because the datasets are many orders of magnitude larger than traditional ones, usually on the scale of terabytes or petabytes. Velocity refers to how fast data is added to the dataset. Variety refers to the different types of data that need to be collected and managed. For network operators, that data can come from multiple sources and arrive in multiple formats, such as location data (from mobile users, for example), OSS billing data, or network telemetry. This data must be efficiently collected and stored somewhere for processing.

Key Considerations: Data can differ widely depending on where it originates, and much of it is unstructured, meaning it will not fit neatly into the fields of a spreadsheet or database. These vast volumes of data are stored in what are known as data lakes, which keep the data in its raw format in a flat structure until it is needed for analysis. To perform an analysis, the data is read directly from the data lake and transformed on the fly using distributed processing frameworks like Apache Spark and Hadoop. The bottom line: given the inherent difficulty of managing the volume, velocity, and variety of this information, the analytics solution should provide a robust data clustering framework with the capacity to rapidly ingest, normalize, categorize, store, and analyze data.
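
To make the "ingest and normalize" step concrete, here is a minimal sketch in plain Python of mapping two differently shaped sources onto one common record layout. It is an illustration only: the field names (`device`, `ts`, `util`, `account_id`, `billed_at`, `amount`) and the target layout are assumptions invented for this example, and a production system would run equivalent logic on a distributed framework rather than in a single process.

```python
import csv
import io
import json
from datetime import datetime, timezone


def normalize_telemetry(line):
    """Map one JSON telemetry line onto the common record layout.

    The input fields (device, ts, util) are hypothetical.
    """
    raw = json.loads(line)
    return {
        "source": "telemetry",
        "entity": raw["device"],
        # ts is assumed to be seconds since the Unix epoch.
        "timestamp": datetime.fromtimestamp(raw["ts"], tz=timezone.utc).isoformat(),
        "metric": "link_utilization_pct",
        "value": float(raw["util"]),
    }


def normalize_billing(row):
    """Map one billing CSV row onto the common record layout.

    billed_at is assumed to be an ISO 8601 string that includes a UTC offset.
    """
    when = datetime.fromisoformat(row["billed_at"]).astimezone(timezone.utc)
    return {
        "source": "billing",
        "entity": row["account_id"],
        "timestamp": when.isoformat(),
        "metric": "billed_amount",
        "value": float(row["amount"]),
    }


def ingest(telemetry_lines, billing_csv):
    """Normalize both sources and return one list ordered by timestamp."""
    records = [normalize_telemetry(line) for line in telemetry_lines if line.strip()]
    records += [normalize_billing(row) for row in csv.DictReader(io.StringIO(billing_csv))]
    # All timestamps are UTC ISO strings, so string order matches time order.
    return sorted(records, key=lambda record: record["timestamp"])
```

Once every source shares the same five fields, downstream categorization and analysis code no longer needs to know where a record came from.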

2. Identifying and understanding data patterns

Big data by itself is meaningless. To unlock its true value and use it to drive smart business decisions, network operators need efficient tools to translate heterogeneous data into meaningful insights. This involves advanced machine learning algorithms that can perform predictive or prescriptive analytics, using techniques ranging from classical probability and statistics to modern deep learning.

Key Considerations: An effective analytics solution should support a rich library of advanced analytics algorithms that allow network operators to extract various levels of information, ranging from hindsight all the way to foresight. However, successfully applying analytics to a business problem also depends on combining expertise from multiple fields, including data science, software engineering, storage, network operations, and systems integration. The ideal solution would therefore take a DevOps approach to network analytics, with a robust ecosystem and framework for collaboration among experts and cross-functional teams.
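
As a small illustration of the "foresight" end of that range, the sketch below uses classical statistics (an ordinary least-squares trend line) to estimate how many days remain before a link's utilization reaches a threshold. It is a deliberately simple stand-in for the richer algorithms described above, not a recommended model; the function names and the daily-sample assumption are hypothetical.

```python
from statistics import mean


def fit_line(xs, ys):
    """Return (slope, intercept) of the least-squares line through the points."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("need at least two distinct x values")
    slope = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / sxx
    return slope, y_bar - slope * x_bar


def days_until_threshold(daily_utilization, threshold):
    """Estimate days after the last sample until the trend reaches threshold.

    daily_utilization holds one sample per day (an assumption of this sketch).
    Returns None when the trend is flat or falling.
    """
    days = list(range(len(daily_utilization)))
    slope, intercept = fit_line(days, daily_utilization)
    if slope <= 0:
        return None
    crossing_day = (threshold - intercept) / slope
    # Clamp at zero: the trend may already be at or above the threshold.
    return max(0.0, crossing_day - days[-1])
```

For example, a link whose utilization rises by two percentage points per day from 50% would be projected to reach 80% eleven days after the fifth sample.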

3. Making business decisions and letting the network act automatically

After extracting meaningful information from the network, the final and most important step is to take action. For the action to be automated, the intelligence from the network must be fed directly back into the operational and functional processes.

Key Considerations: Intelligent automation requires an extensible and open architecture that makes it possible to gather network intelligence and translate it into specific actions. This means the analytics solution should integrate easily with the policy systems that govern the integrity of the network and with the systems that carry out actions, such as multi-domain service orchestration systems.
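
The closed loop described here can be sketched as three stages: an insight proposes an action, a policy check approves or rejects it, and an orchestrator callback executes it. The sketch below is a hedged illustration only. Every name in it (`Insight`, `Action`, `propose_action`, `policy_allows`, `closed_loop`), the 80% threshold, and the capacity figures are hypothetical and do not represent any vendor's API.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List, Optional, Set


@dataclass(frozen=True)
class Insight:
    link_id: str
    predicted_utilization: float  # percent, as produced by the analytics stage


@dataclass(frozen=True)
class Action:
    kind: str
    link_id: str
    add_capacity_gbps: int


def propose_action(insight: Insight, threshold: float = 80.0) -> Optional[Action]:
    """Translate an insight into a candidate action (hypothetical rule)."""
    if insight.predicted_utilization < threshold:
        return None
    return Action("add_capacity", insight.link_id, 10)


def policy_allows(action: Action, protected_links: Set[str], max_gbps: int = 100) -> bool:
    """Stand-in for a policy system: block protected links and oversized changes."""
    return action.link_id not in protected_links and action.add_capacity_gbps <= max_gbps


def closed_loop(
    insights: Iterable[Insight],
    protected_links: Set[str],
    execute: Callable[[Action], None],
) -> List[Action]:
    """Run each insight through propose, policy check, and execute."""
    executed = []
    for insight in insights:
        action = propose_action(insight)
        if action is not None and policy_allows(action, protected_links):
            execute(action)  # in practice, a call into the orchestration system
            executed.append(action)
    return executed
```

Passing the orchestrator in as a plain callable keeps the loop independent of any particular orchestration system, which is the kind of openness the paragraph above asks for.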

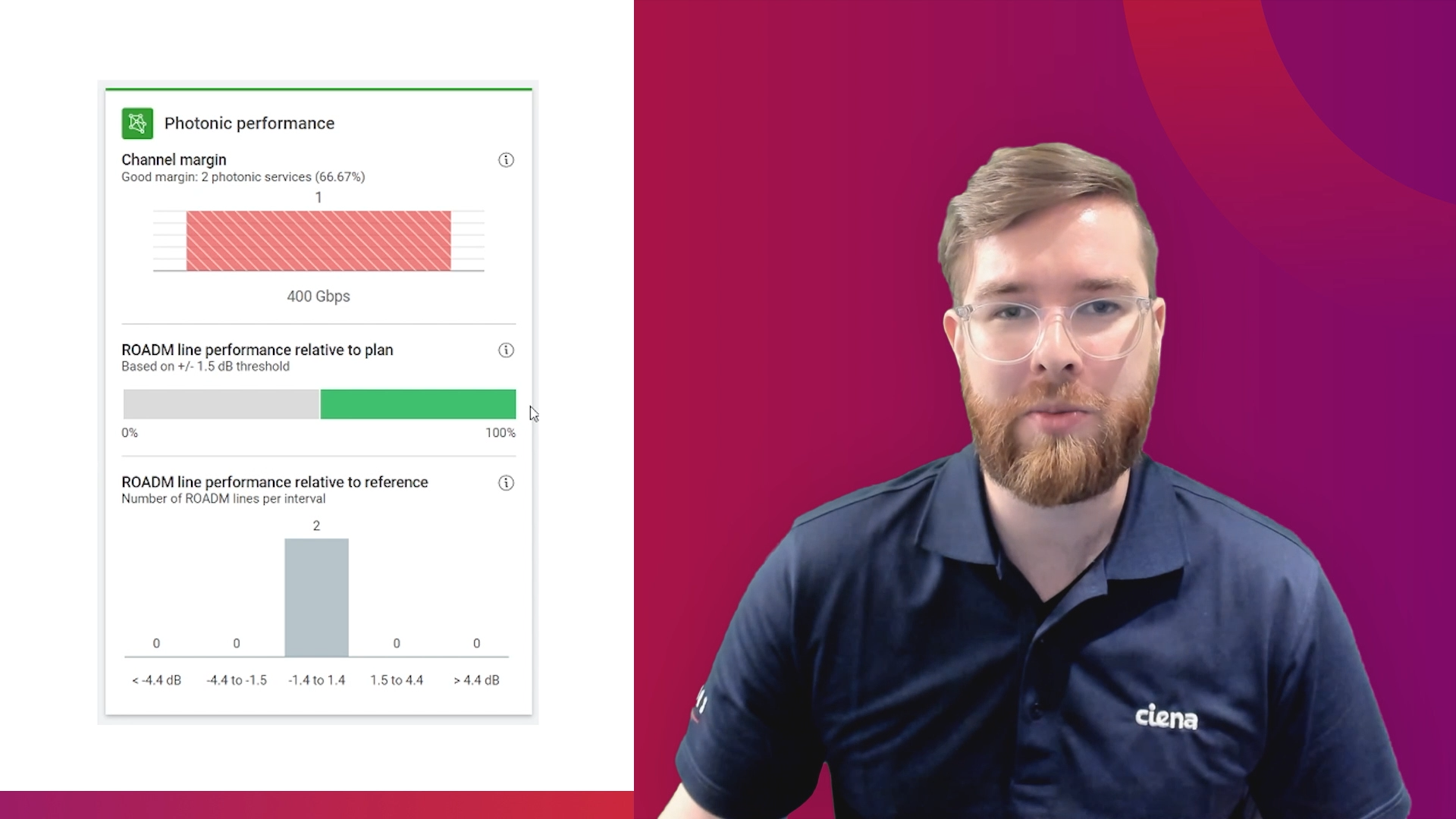

Ciena’s Blue Planet software suite and Blue Planet Analytics (BPA) solution provide a unique combination of the above attributes and more. To explore further, download the white paper Making Intelligent Automation a Reality with Advanced Analytics, which also includes examples of real-world network analytics use cases that take advantage of these attributes.